Hi Sulaiman,

Based on the results you shared, nn-UNet overall performed the best on cross-validation for most of the settings (supervised and semi-supervised). However, on the public LB, UNet-semi-supervised achieved the best performance. Is there any explanation for this?

According to the Open Development Phase - Validation and Tuning Leaderboard, as of now, the baseline nnDetection (semi-supervised) seems to be the overall best model (considering both detection + diagnosis performance). It's true that the baseline U-Net (semi-supervised) seems to marginally outperform the baseline nnU-Net (semi-supervised) on that leaderboard. However, it's worth considering that this ranking is based on only 100 cases. On the final hidden testing cohort of 1000 cases (including cases from an unseen external center), these models may rank in a completely different order. Perhaps it's best to look at performance across both sets of data (cross-validation using 1500 cases + held-out validation on the leaderboard using 100 cases) to inform your model development cycle, but we leave such decisions completely up to the participants.
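To get a feel for how much a ranking based on 100 cases can move around, here is a minimal sketch that bootstraps a confidence interval for a case-level metric on cohorts of different sizes. It is not part of picai_baseline or the challenge evaluation code: AUROC is used only as an example metric, the function names (`auroc`, `bootstrap_ci`, `simulate_cohort`) are hypothetical, and the cohort is synthetic data generated inside the script.

```python
import numpy as np


def auroc(labels, scores):
    """Rank-based AUROC (Mann-Whitney U); tied scores get their average rank."""
    labels = np.asarray(labels, dtype=bool)
    scores = np.asarray(scores, dtype=float)
    n_pos = int(labels.sum())
    n_neg = labels.size - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("need at least one positive and one negative case")
    # 1-based average rank of every score, computed per group of tied values
    _, inverse, counts = np.unique(scores, return_inverse=True, return_counts=True)
    last_rank = np.cumsum(counts)
    avg_rank = last_rank - (counts - 1) / 2.0
    ranks = avg_rank[inverse]
    u = ranks[labels].sum() - n_pos * (n_pos + 1) / 2.0
    return float(u / (n_pos * n_neg))


def bootstrap_ci(labels, scores, n_boot=1000, alpha=0.05, seed=0):
    """Percentile bootstrap CI for AUROC, resampling cases with replacement."""
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels, dtype=bool)
    scores = np.asarray(scores, dtype=float)
    n = labels.size
    stats = []
    while len(stats) < n_boot:
        idx = rng.integers(0, n, size=n)
        resampled = labels[idx]
        if resampled.all() or not resampled.any():
            continue  # skip resamples that contain a single class only
        stats.append(auroc(resampled, scores[idx]))
    lo, hi = np.quantile(stats, [alpha / 2, 1 - alpha / 2])
    return float(lo), float(hi)


def simulate_cohort(n_cases, seed):
    """SYNTHETIC cohort for illustration only; not challenge data."""
    rng = np.random.default_rng(seed)
    labels = rng.random(n_cases) < 0.3  # assumed prevalence, arbitrary
    scores = rng.normal(loc=labels.astype(float), scale=1.0)
    return labels, scores


if __name__ == "__main__":
    for n_cases in (100, 1000):
        labels, scores = simulate_cohort(n_cases, seed=1)
        lo, hi = bootstrap_ci(labels, scores)
        print(f"{n_cases} cases: AUROC 95% CI width = {hi - lo:.3f}")
```

If the intervals of two models overlap heavily at 100 cases, a marginal lead on the leaderboard says little about how they would rank on a larger cohort.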

A substantial difference between the 5-fold cross-validation metrics on the training dataset of 1500 cases and the performance metrics on the leaderboard, which uses the hidden validation cohort of 100 cases, is to be expected. We believe this is due to the factors discussed here.

Also, I tried to reproduce the baseline results locally for both semi-UNet and semi-nnUNet; however, the cross-validation performance is far lower than your reported results.

Not sure if we are missing something here.

Assuming that you're using the same number of cases [1295 cases with human annotations (supervised) or 1295 cases with human annotations + 205 cases with AI annotations (semi-supervised)], preprocessed the same way, and that you have trained (default command with the same number of epochs, data augmentation, hyperparameters, model selection, etc.), 5-fold cross-validated (same splits as provided) and ensembled (using member models from all 5 folds) the baseline AI models exactly as indicated in the latest iteration of picai_baseline, your performance during cross-validation and on the leaderboard should be similar to ours.

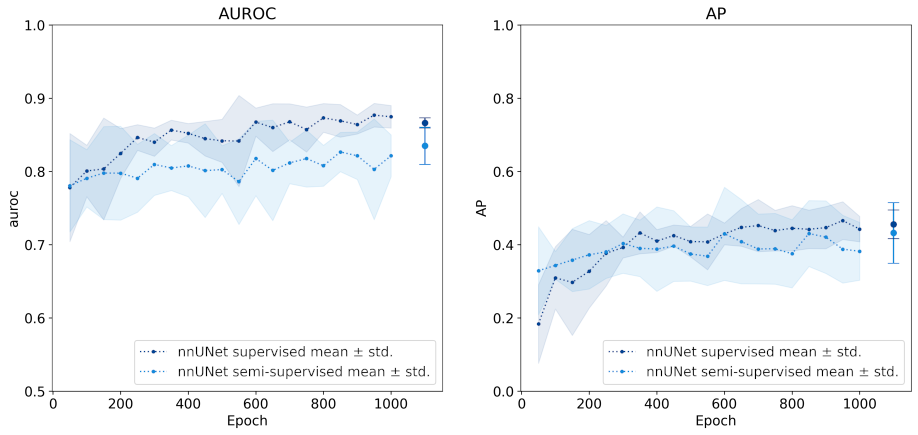

Deviations may still exist owing to the stochasticity of optimizing DL models at train-time. Because of this, the same AI architecture, trained on the same data for the same number of training steps, can typically exhibit slightly different performance each time (Frankle et al., 2019). Some AI models + training methods are more susceptible to this form of performance instability across training runs than others (this also depends on the task + dataset + supervision/annotations, of course). As we have only trained a single instance of each of our baseline AI models thus far, it's difficult to comment specifically on their expected variance in performance across training runs. During our final performance estimation of the top 5 teams' algorithms on the hidden testing cohort of 1000 cases (to determine the winner of the challenge), this factor will be accounted for [as detailed in Item 28 (pg 15) of our study protocol].
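If you have the compute to retrain the same configuration a few times with different random seeds, a rough way to judge whether a gap to a reported number is within run-to-run noise is sketched below. This is only an illustration under simple assumptions (a handful of independent runs, a crude "within a few standard deviations" rule); the function names are hypothetical and this is not how the organizers will account for training variance in the final evaluation.

```python
from statistics import mean, stdev


def summarize_runs(scores):
    """Mean, sample standard deviation and range of one metric across runs."""
    if len(scores) < 2:
        raise ValueError("need at least two training runs to estimate spread")
    return {
        "mean": mean(scores),
        "std": stdev(scores),
        "min": min(scores),
        "max": max(scores),
    }


def within_run_noise(reference, scores, n_std=2.0):
    """True if `reference` lies within `n_std` standard deviations of the
    mean of your own runs. A crude heuristic, not a statistical test."""
    summary = summarize_runs(scores)
    return abs(reference - summary["mean"]) <= n_std * summary["std"]
```

Here `scores` would be the same metric (e.g. the cross-validation score) from each of your retrained runs, and `reference` the value reported for the baseline. A gap far outside that spread points to a difference in setup rather than to training stochasticity.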

Hope this helps.