Hi Z. Huang,

Great to hear most of the steps went smoothly!

Our baseline solutions use the three axial sequences, which are available for all cases:

1. Axial T2-weighted imaging (T2W): [patient_id]_[study_id]_t2w.mha

2. Axial computed high b-value (≥ 1000 s/mm²) diffusion-weighted imaging (DWI): [patient_id]_[study_id]_hbv.mha

3. Axial apparent diffusion coefficient maps (ADC): [patient_id]_[study_id]_adc.mha

After resampling the high b-value and apparent diffusion coefficient maps to the spatial resolution of the axial T2-weighted image, each voxel corresponds to the same physical part of the prostate/body in all three sequences. This means the images line up (up to registration errors) when they are concatenated and fed to an architecture like the U-Net. As such, each of the "matrix elements" that make up the network's input contains the intensity values of the three different sequences at the same physical location. This is comparable to how the red, green and blue channels of a photo produce a color image, as long as they are not shifted with respect to each other.
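To make the channel analogy concrete, here is a minimal sketch of the stacking step. It is not taken from the baseline code: the random arrays are stand-ins for the three volumes after resampling, and stack_channels is a hypothetical helper name.

```python
import numpy as np

# Hypothetical stand-ins for the three axial volumes after the ADC and
# high b-value scans were resampled to the T2W grid (depth, height, width).
shape = (4, 8, 8)
rng = np.random.default_rng(0)
t2w = rng.normal(size=shape).astype(np.float32)
adc = rng.normal(size=shape).astype(np.float32)
hbv = rng.normal(size=shape).astype(np.float32)


def stack_channels(*volumes):
    """Stack volumes along a new leading channel axis.

    Only the array shapes are checked here; that the voxels also share
    the same physical location is assumed to be ensured by resampling.
    """
    shapes = {v.shape for v in volumes}
    if len(shapes) != 1:
        raise ValueError(f"volumes are not on the same grid: {shapes}")
    return np.stack(volumes, axis=0)


x = stack_channels(t2w, adc, hbv)  # shape: (3, 4, 8, 8)

# One "matrix element": the T2W, ADC and HBV intensities at one location.
voxel = x[:, 2, 5, 3]
```

A sagittal or coronal volume would generally have a different shape and orientation, so the same call would either fail the shape check or, worse, silently mix intensities from different physical locations.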

The remaining two sequences (which are available for most cases, but not all) have a different physical orientation from the three above, and are not used by our baseline solutions:

4. Sagittal T2-weighted imaging: [patient_id]_[study_id]_sag.mha

5. Coronal T2-weighted imaging: [patient_id]_[study_id]_cor.mha

Simply concatenating these images to the axial images would mean that the intensity values in a given "matrix element" do not correspond to the same physical location in each of the five sequences. As such, including these differently oriented sequences most likely requires a custom architecture, which we did not use for the baseline algorithms. If you would like to learn more about this, you can check out, for example:

Taiping Qu et al., "M3Net: A multi-scale multi-view framework for multi-phase pancreas segmentation based on cross-phase non-local attention", Medical Image Analysis

The choice to include the three axial sequences is made implicitly in prepare_data.py#L73: generate_mha2nnunet_settings(...)

This function is defined within the picai_prep repository. Multi-view preprocessing (i.e., combining axial, sagittal and coronal scans) is not supported by picai_prep, because there is no clear "best" way to do this. We leave it to the participants to implement this in conjunction with the architectural choices that come with it.

The choice for {'0': 'T2W', '1': 'CT', '2': 'HBV'} in the dataset.json does the following:

- For the first sequence (which is the axial T2-weighted scan), use instance-wise z-score normalisation

- For the second sequence (which is the axial ADC scan), use dataset-wise z-score normalisation

- For the third sequence (which is the axial high b-value scan), use instance-wise z-score normalisation

nnU-Net uses CT to indicate that a sequence should be normalised with the dataset-wise mean and variance, rather than the instance-wise mean and variance. We did this because the values in an ADC scan are diagnostically relevant, so their absolute scale should be preserved across cases. Please see the nnU-Net paper for more details, including their choice to use foreground pixels to determine dataset-wise normalisation statistics and 0.5% and 99.5% percentiles to clip values when performing dataset-wise z-score normalisation.
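The difference between the two schemes can be sketched as follows. This is a simplified illustration based on the description above, not nnU-Net's actual implementation; all function names and the small epsilon are assumptions made for the example.

```python
import numpy as np

# Modality mapping as used in the dataset.json discussed above.
modalities = {"0": "T2W", "1": "CT", "2": "HBV"}


def zscore_instance(volume):
    """Instance-wise z-score: statistics come from this volume only."""
    return (volume - volume.mean()) / (volume.std() + 1e-8)


def dataset_statistics(foreground_values):
    """Statistics from the pooled foreground voxels of the training set."""
    lower, upper = np.percentile(foreground_values, [0.5, 99.5])
    return {
        "mean": float(foreground_values.mean()),
        "std": float(foreground_values.std()),
        "lower": float(lower),
        "upper": float(upper),
    }


def zscore_dataset(volume, mean, std, lower, upper):
    """Dataset-wise z-score: clip to the dataset percentiles, then
    normalise with the dataset mean and standard deviation."""
    clipped = np.clip(volume, lower, upper)
    return (clipped - mean) / std


def normalise(channels, modalities, stats):
    """Pick the scheme per channel: 'CT' means dataset-wise statistics."""
    out = []
    for idx, volume in enumerate(channels):
        if modalities[str(idx)] == "CT":
            out.append(zscore_dataset(volume, **stats))
        else:
            out.append(zscore_instance(volume))
    return np.stack(out, axis=0)
```

With dataset-wise statistics, two ADC scans with different absolute values remain different after normalisation. With instance-wise statistics, each scan would be rescaled to zero mean and unit variance on its own, and that difference would be lost.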

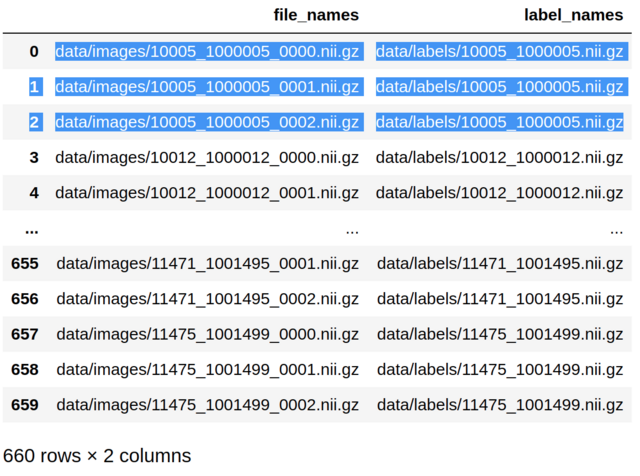

We got the same error as you when training the baseline algorithms. The error is caused by a single case which has a peculiar resampling effect. The case in question, 11475_1001499, is resampled from a voxel spacing of (0.56, 0.56, 3) to (0.5, 0.5, 3). During this resampling, the annotation somehow breaks into two components, as indicated by the error message (from the conversion log):

AssertionError: Label has changed due to resampling/other errors for 11475_1001499! Have 1 -> 2 isolated ground truth lesions

When resampling, picai_prep checks the number of non-touching components, and raises an error when this number changes. We do not have a recommended way of handling this one case. Personally, we excluded this case when training the U-Net baseline.
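For intuition, a check of this kind could look roughly like the sketch below. This is not the picai_prep implementation: the function names, the error message and the choice of connectivity (voxels touching by face, edge or corner count as connected) are all assumptions. In practice a library routine such as scipy.ndimage.label would typically do the counting; a plain breadth-first search is used here to keep the example dependency-light.

```python
from collections import deque
from itertools import product

import numpy as np


def count_components(mask):
    """Count groups of non-zero voxels that do not touch each other.

    Assumption: voxels touching by face, edge or corner are connected.
    """
    mask = np.asarray(mask) > 0
    seen = np.zeros(mask.shape, dtype=bool)
    # All neighbour offsets except the zero offset (the voxel itself).
    offsets = [o for o in product((-1, 0, 1), repeat=mask.ndim) if any(o)]
    count = 0
    for start in zip(*np.nonzero(mask)):
        if seen[start]:
            continue
        count += 1
        seen[start] = True
        queue = deque([start])
        while queue:
            voxel = queue.popleft()
            for offset in offsets:
                nb = tuple(int(v) + o for v, o in zip(voxel, offset))
                inside = all(0 <= n < s for n, s in zip(nb, mask.shape))
                if inside and mask[nb] and not seen[nb]:
                    seen[nb] = True
                    queue.append(nb)
    return count


def check_label_preserved(before, after, case_id):
    """Raise if resampling changed the number of isolated lesions."""
    n_before = count_components(before)
    n_after = count_components(after)
    if n_before != n_after:
        raise AssertionError(
            f"{case_id}: {n_before} -> {n_after} isolated components"
        )
```

A thin lesion that is one voxel wide at some point can lose that connecting voxel during nearest-neighbour or interpolated resampling, which is one plausible way for a single component to split in two.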

Hope this helps,

Joeran

P.S.: we have now released the AI-derived annotations for all 1500 cases (see picai_labels). You can include those to leverage the remaining 205 cases with csPCa as well. Our cross-validation results were shaky, but we noticed substantial performance improvements over the supervised baseline models on the Open Development Phase - Validation and Tuning Leaderboard. Maybe this annotation can also solve the issue with case 11475_1001499.

You can use prepare_data_semi_supervised.py to prepare your data when including the AI-derived annotations. This script combines the human-expert and AI-derived annotations, and uses an updated task name and paths. If you add `if "11475_1001499" in fn: continue` at prepare_data_semi_supervised.py#L79, you skip the human-expert annotation for this case in favor of the AI-derived one.
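In isolation, the effect of that extra line is roughly the following. The surrounding loop, the function name and the file names are hypothetical and only serve to illustrate the skip; the actual loop in prepare_data_semi_supervised.py may look different.

```python
def select_human_annotations(filenames, skip_case="11475_1001499"):
    """Return the human-expert annotation files that should be used,
    leaving out the case whose annotation breaks during resampling."""
    selected = []
    for fn in filenames:
        if skip_case in fn:
            # Skipped here, so the AI-derived annotation is kept instead.
            continue
        selected.append(fn)
    return selected


# Hypothetical file names, for illustration only.
files = [
    "10000_1000000.nii.gz",
    "11475_1001499.nii.gz",
    "10001_1000001.nii.gz",
]
kept = select_human_annotations(files)
```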